Demo page for microphone array processing using Bayesian models

Overview

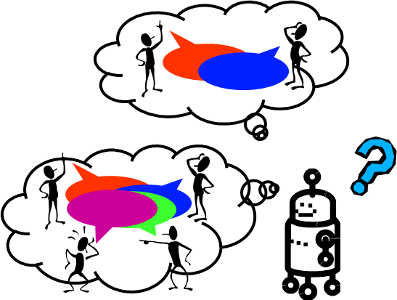

Auditory information processing techniques such as speech recognition and sound source classification are fundamental functions that enable computers and robots to behave intelligently on the basis of auditory information. Although these techniques typically assume a single sound source as input, real environments often contain multiple sound sources. For example, in an indoor environment noise may come from a TV set or a vacuum cleaner, while traffic noise may interfere with the observation of a sound source of interest. Such interfering sound sources degrade the quality of the extracted information, for example by lowering speech recognition accuracy or sound classification performance.

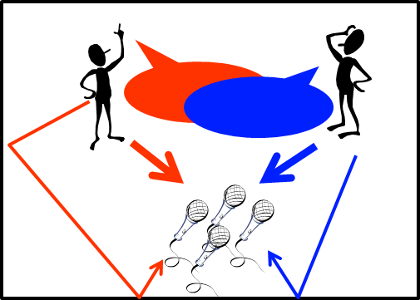

To cope with multisource situations, microphone array processing is often used to separate the observed mixture into its constituent sound sources. Although a microphone array can separate and localize the sources in an observed mixture, existing microphone array methods have limited capability to deal with auditory uncertainties in the environment. The research presented on this demonstration page develops Bayesian nonparametric microphone array processing methods that cope with the following three auditory uncertainties.

- Unknown number of sound sources: Many microphone array-based methods assume that the number of sound sources in the observation is known. To overcome this uncertainty about the source number, we developed a sound source separation and localization method based on Bayesian nonparametrics that can, in principle, handle an arbitrary number of sound sources.

- Reverberation: Audio signals observed in an indoor environment contain reverberation caused by sound reflecting off the walls and floor. Because reverberation can severely degrade sound source separation, a reverberation removal (dereverberation) function is often incorporated into microphone array processing to make it robust against reverberation. Existing dereverberation methods with a microphone array, however, require the number of sources in the observation to be smaller than the number of microphones. This requirement conflicts with source number uncertainty, since the number of sources is not known in advance. Our sound source separation and dereverberation method incorporates a Bayesian nonparametric model to overcome this limitation.

- Dynamic environments: Most microphone array-based techniques are designed for situations where the relative positions of the microphones and sound sources are fixed over time. We partly address this limitation by applying the Bayesian nonparametric source separation method in a dynamic environment where the sound sources or microphones may move. Here, we show separation results for an audio mixture of two sound sources recorded by a microphone array embedded in a mobile robot.
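A common Bayesian nonparametric building block for an unknown number of components is the Dirichlet process, which can be sampled with the truncated stick-breaking construction. The sketch below is a generic illustration under our own assumptions, not the model used in the methods on this page: the concentration `alpha`, the `truncation` level (an upper bound on candidate sources, not the true source count), and the `threshold` for calling a source "active" are hypothetical parameters. It shows how a prior can spread probability mass over many candidate sources while only a few of them receive appreciable weight.

```python
import random


def stick_breaking_weights(alpha, truncation, rng):
    """Truncated stick-breaking construction of Dirichlet process weights.

    alpha: concentration parameter (hypothetical value chosen by the user).
    truncation: maximum number of candidate sources considered.
    """
    weights = []
    remaining = 1.0  # length of the stick that has not been broken off yet
    for _ in range(truncation - 1):
        v = rng.betavariate(1.0, alpha)  # fraction of the remaining stick
        weights.append(remaining * v)
        remaining *= 1.0 - v
    weights.append(remaining)  # the last candidate takes the leftover mass
    return weights


def active_sources(weights, threshold=0.01):
    """Indices of candidate sources whose weight exceeds a (hypothetical) threshold."""
    return [k for k, w in enumerate(weights) if w > threshold]


rng = random.Random(0)
w = stick_breaking_weights(alpha=1.0, truncation=20, rng=rng)
print(active_sources(w))  # typically only a handful of the 20 candidates
```

In an actual separation method the weights would be inferred from the observed mixture rather than drawn from the prior as here. A smaller `alpha` tends to concentrate the mass on fewer candidate sources.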

1. Unknown number of sound sources

2. Reverberation

3. Dynamic environments

1. Sound source separation in source number uncertainty

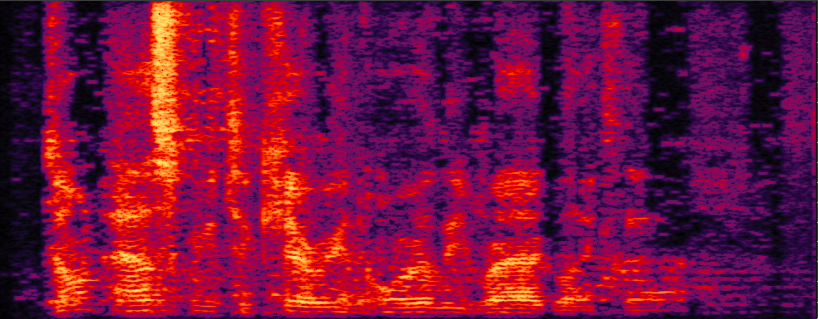

Two separation results obtained with a 4-channel microphone array are presented: (i) 2 sound sources and (ii) 5 sound sources. Our method requires no parameter tuning that depends on the number of sources; the same configuration is used in both cases. Audio playback has been checked with the Chrome browser. Headphones are recommended.

- Separation of 2 sources

Input mixture

Result audio (female)

Result audio (male)

- Separation of 5 sources

Input mixture

Result audio (-90 deg.)

Result audio (-60 deg.)

Result audio (0 deg.)

Result audio (60 deg.)

Result audio (90 deg.)

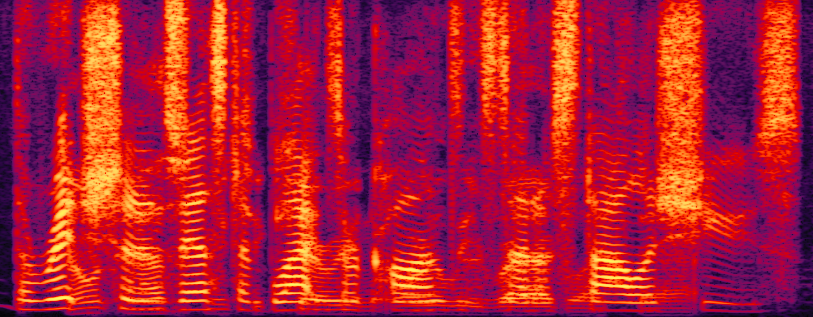

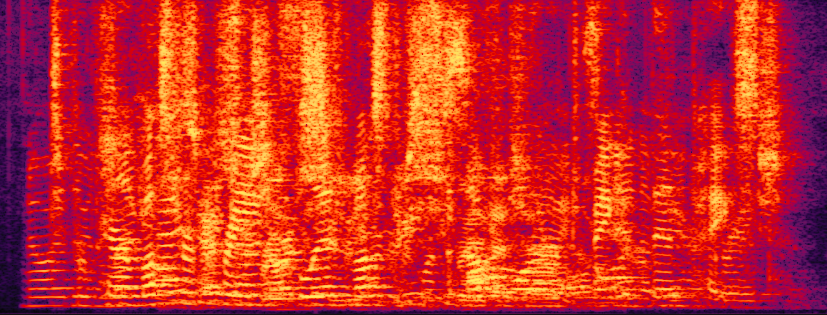

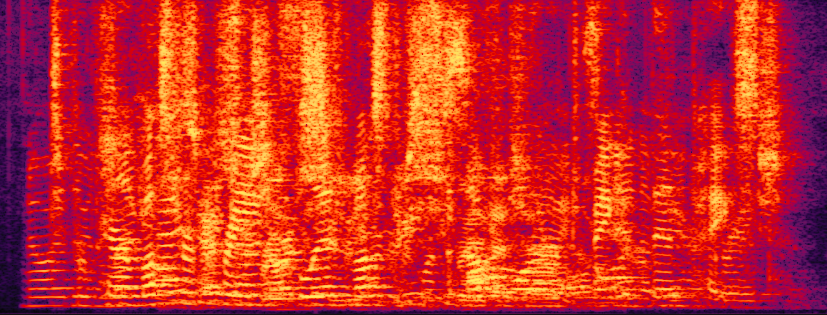

2. Sound source separation and dereverberation in source number uncertainty

Reverberation often degrades separation quality. In our method, reverberation is modeled as the propagation of observed signals from past time frames into the current time frame. In this part, the mixtures again have 4 channels, with two cases: (i) two sources and (ii) five sources. Results with and without (w/ and w/o) dereverberation are presented.

- Results of 2 sources

- w/o dereverberation: echoes and reflections of the other sound remain in the audio signal.

Reverberant input mixture

Result audio (female)

Result audio (male)

- w/ dereverberation: reflected sound is suppressed.

Input mixture (the same as above)

Result audio (female)

Result audio (male)


- Results of 5 sources

- w/o dereverberation

Reverberant input mixture

Result audio (-90 deg.)

Result audio (-60 deg.)

Result audio (0 deg.)

Result audio (60 deg.)

Result audio (90 deg.)

- w/ dereverberation

Input mixture (the same as above)

Result audio (-90 deg.)

Result audio (-60 deg.)

Result audio (0 deg.)

Result audio (60 deg.)

Result audio (90 deg.)


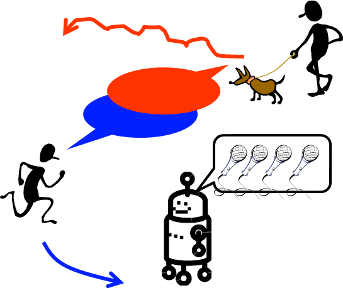

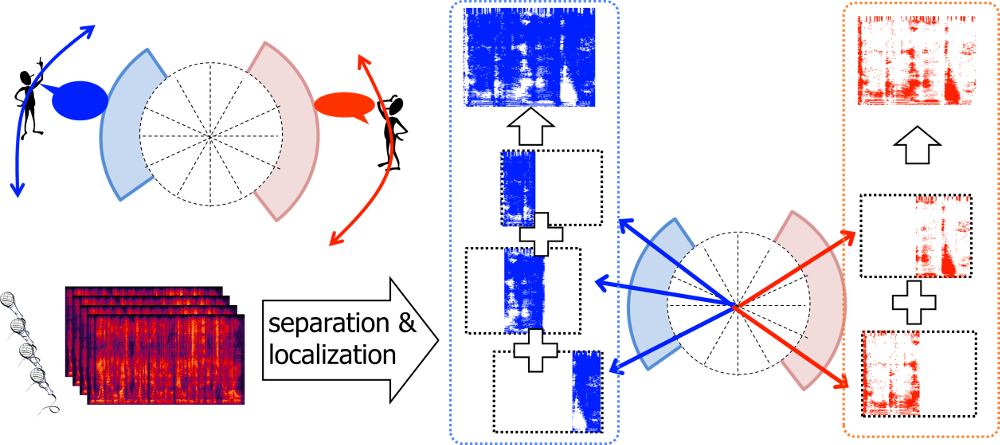
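The reverberation model described at the start of this section, in which observed signals from past time frames propagate into the current frame, resembles the delayed multichannel linear prediction used in WPE-style dereverberation. The sketch below illustrates that general idea under our own assumptions and is not the Bayesian model behind these results. For a single frequency bin, it stacks the past STFT frames x[t-delay], ..., x[t-delay-taps+1] of all M microphones, fits a prediction filter by plain (unweighted) least squares, and subtracts the predicted reverberation from the current frame. The function name `delayed_lp_dereverb` and the parameters `delay` and `taps` are hypothetical.

```python
import numpy as np


def delayed_lp_dereverb(X, delay=2, taps=3):
    """Delayed linear-prediction dereverberation for one frequency bin.

    X: complex array of shape (T, M), STFT frames of one bin for M microphones.
    Returns the dereverberated frames D (same shape) and the filter G.
    """
    T, M = X.shape
    start = delay + taps - 1  # first frame with a full set of past frames
    # Each regressor row concatenates x[t - delay - k] for k = 0..taps-1.
    Phi = np.array([
        np.concatenate([X[t - delay - k] for k in range(taps)])
        for t in range(start, T)
    ])                                   # shape (T - start, M * taps)
    target = X[start:]                   # shape (T - start, M)
    # Least-squares prediction filter: target is approximated by Phi @ G.
    G, *_ = np.linalg.lstsq(Phi, target, rcond=None)
    D = X.copy()
    D[start:] = target - Phi @ G         # remove the predicted reverberation
    return D, G
```

The prediction delay keeps the filter from cancelling the direct sound and early part of each source, which are strongly correlated with the immediately preceding frames. WPE itself replaces plain least squares with iteratively variance-weighted least squares.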

3. Sound source separation with a moving microphone array

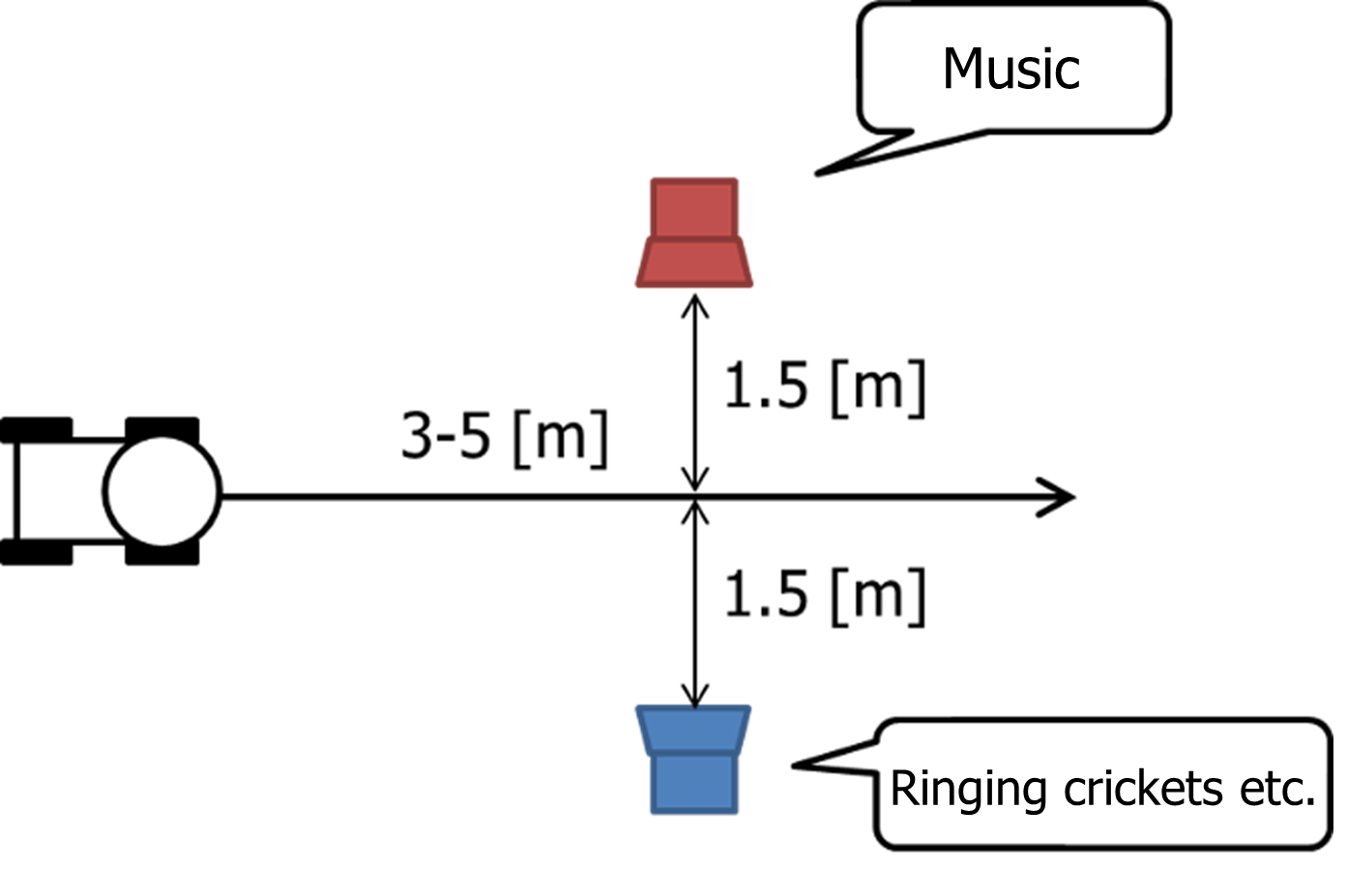

We tackle the sound source separation problem in a dynamic environment where the relative positions of the sound sources and microphones change over time. We address this uncertainty only partly: we assume that each source moves within its own direction range and that these ranges do not overlap, as illustrated in the overview figure. To separate sound sources in a dynamic environment, we apply the Bayesian nonparametric separation and localization method, which splits each moving sound source into segments along the time axis. To reconstruct each source signal, we merge the separated segments whose localized directions fall within that source's direction range.
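The merging step can be sketched as follows, under simplifying assumptions of our own: each separated segment is represented by its start sample, its separated waveform, and a single localized direction in degrees; the direction range of each source is given in advance, and the ranges do not overlap; and segments assigned to the same source are simply added into one output signal. The names `Segment` and `reconstruct_sources`, the sign convention for directions, and the example ranges are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Segment:
    start: int              # first sample index of the segment in the recording
    samples: list[float]    # separated waveform of this segment
    direction: float        # localized direction in degrees


def reconstruct_sources(segments, ranges, length):
    """Merge segments into one signal per source by direction range.

    ranges: dict mapping a source name to a half-open (low, high) range in
    degrees; the ranges are assumed to be disjoint.
    """
    outputs = {name: [0.0] * length for name in ranges}
    for seg in segments:
        for name, (low, high) in ranges.items():
            if low <= seg.direction < high:
                out = outputs[name]
                for i, s in enumerate(seg.samples):
                    if seg.start + i < length:
                        out[seg.start + i] += s
                break  # disjoint ranges: at most one source matches
    return outputs


ranges = {"left": (-90.0, 0.0), "right": (0.0, 90.0)}
segs = [Segment(0, [1.0, 1.0], -40.0), Segment(2, [0.5, 0.5], -10.0),
        Segment(0, [2.0, 2.0, 2.0], 30.0)]
print(reconstruct_sources(segs, ranges, 4)["left"])  # [1.0, 1.0, 0.5, 0.5]
```

Segments whose direction falls outside every range are discarded in this sketch; a real system might instead report them as additional sources.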

Overview of separation in a dynamic environment: the blue and red sound sources move in disjoint direction ranges.

Example: a mobile robot passes between two loudspeakers. The left loudspeaker plays music, whereas the right loudspeaker plays calls of frogs, crickets, etc.

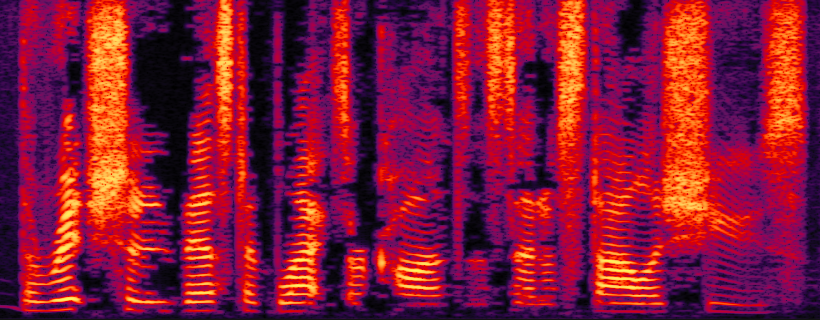

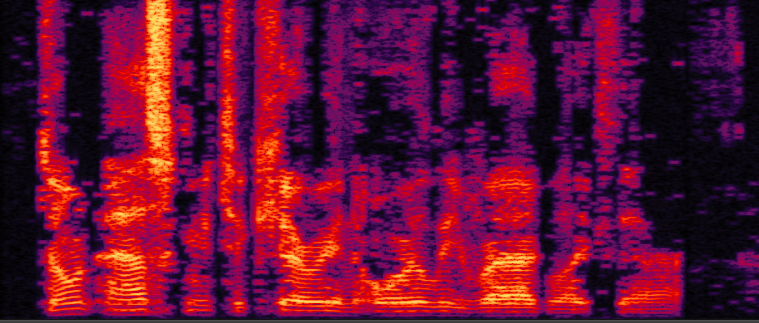

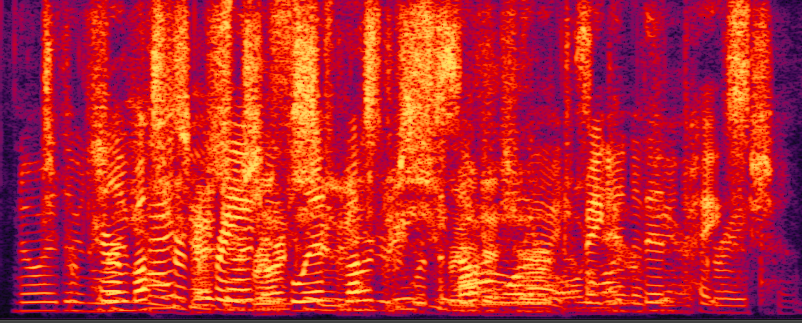

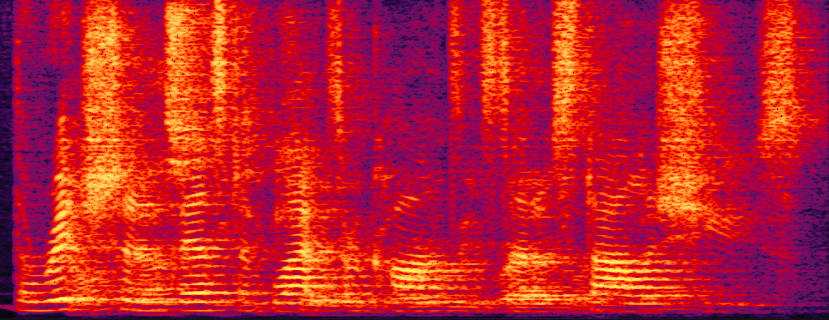

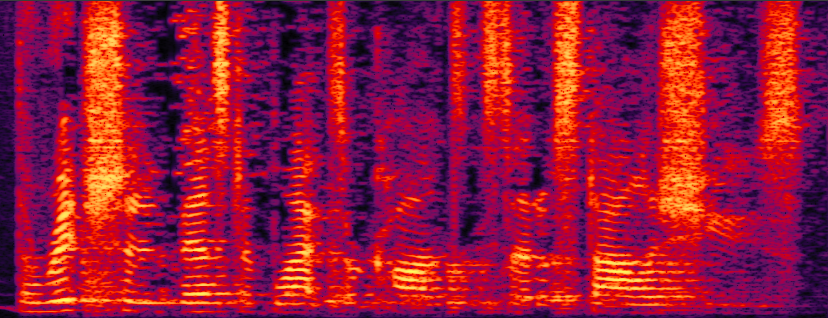

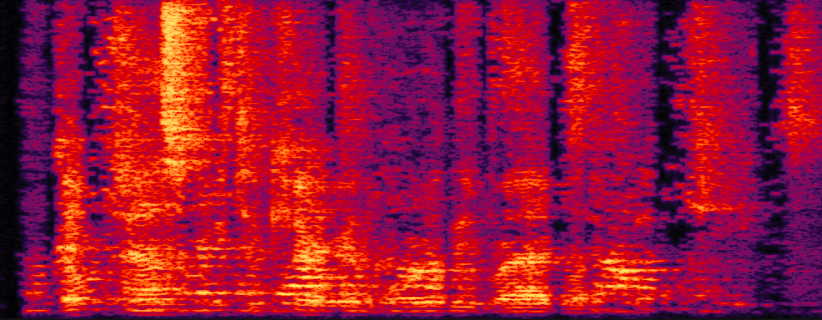

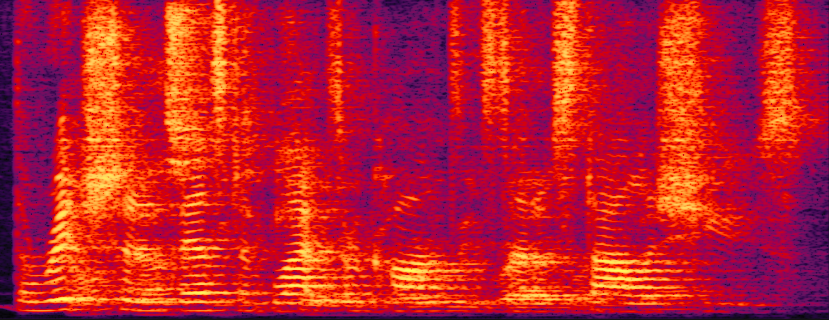

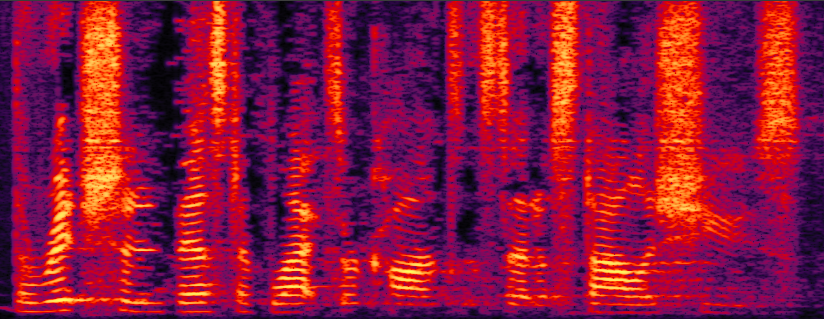

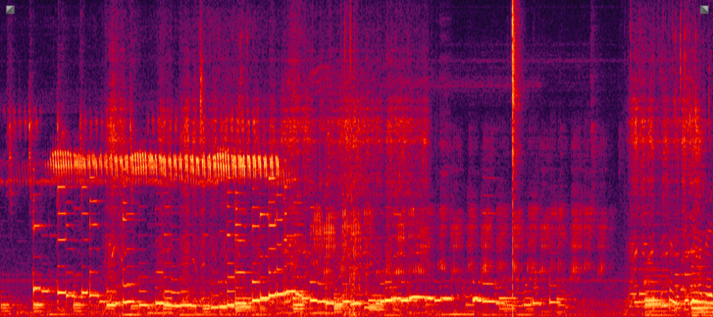

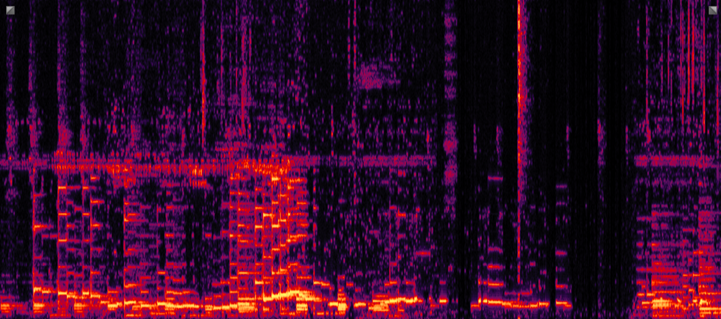

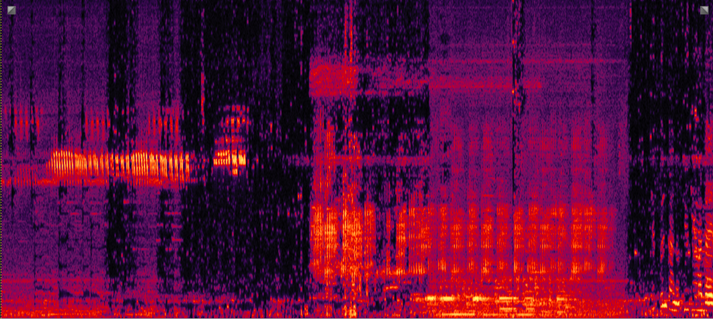

Separated results of the mobile robot example.

Observed audio (robot moving during 3-8 s)

Left: music

Right: frogs and crickets

Related publications

- Takuma Otsuka, Katsuhiko Ishiguro, Hiroshi Sawada, Hiroshi G. Okuno: "Bayesian Nonparametrics for Microphone Array Processing," IEEE Transactions on Audio, Speech and Language Processing, Vol. 22, No. 2, pp. 493-504, 2014. doi: 10.1109/TASLP.2013.2294582

- Takuma Otsuka, Katsuhiko Ishiguro, Hiroshi Sawada, Hiroshi G. Okuno: "Unified Auditory Functions based on Bayesian Topic Model," Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2012), pp. 2370-2376, 2012.

- Takuma Otsuka, Katsuhiko Ishiguro, Hiroshi Sawada, Hiroshi G. Okuno: "Bayesian Unification of Sound Source Localization and Separation with Permutation Resolution," Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence (AAAI-12), pp. 2038-2045, 2012.