CHALLENGES IN DEPLOYING A MICROPHONE ARRAY TO LOCALIZE AND SEPARATE SOUND SOURCES IN REAL AUDITORY SCENES

Y. Bando, T. Otsuka, K. Itoyama, K. Yoshii, Y. Sasaki, S. Kagami, and H. G. Okuno

Abstract

Analyzing the auditory scene of real environments is challenging, partly because an unknown number and variety of sound sources are observed at the same time, and partly because these sounds reach the microphone at significantly different sound pressure levels. These problems remain difficult even for state-of-the-art sound source localization and separation methods. In this paper, we examine two such methods using a microphone array: (1) Bayesian nonparametric microphone array processing (BNP-MAP), which can separate and localize sound sources when the number of sources is unspecified, and (2) the robot audition software "HARK", which can separate and localize sound sources in real time. Through experimentation, we found BNP-MAP to be the more robust of the two against differences in the sound pressure levels of the source signals and against spatial closeness of the source positions. Experiments analyzing real scenes, namely human conversations recorded in a large exhibition hall and bird calls recorded in a natural park, demonstrate the efficacy and applicability of BNP-MAP.
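To make the level mismatch between sources concrete, the following minimal sketch computes the level difference in decibels between two signals from their RMS amplitudes. It is only an illustration and not part of BNP-MAP or HARK; the function names, tone frequencies, and amplitudes are assumptions chosen for the example.

```python
import math

def rms(signal):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))

def level_difference_db(sig_a, sig_b):
    """Level of sig_a relative to sig_b in dB (20 * log10 of the RMS ratio)."""
    return 20.0 * math.log10(rms(sig_a) / rms(sig_b))

# Hypothetical sources: two one-second tones sampled at 16 kHz,
# the first with ten times the amplitude of the second.
fs = 16000
loud = [1.0 * math.sin(2 * math.pi * 440 * n / fs) for n in range(fs)]
quiet = [0.1 * math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs)]

diff = level_difference_db(loud, quiet)
print(f"Level difference: {diff:.1f} dB")
```

A tenfold amplitude ratio corresponds to a 20 dB level difference, which gives a sense of how easily a quiet source can be masked by a louder one at the array.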

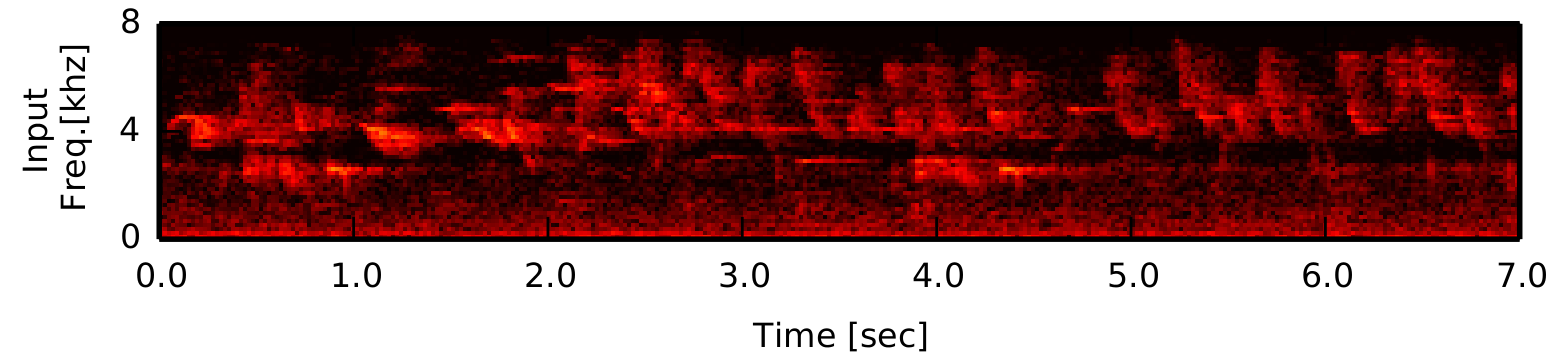

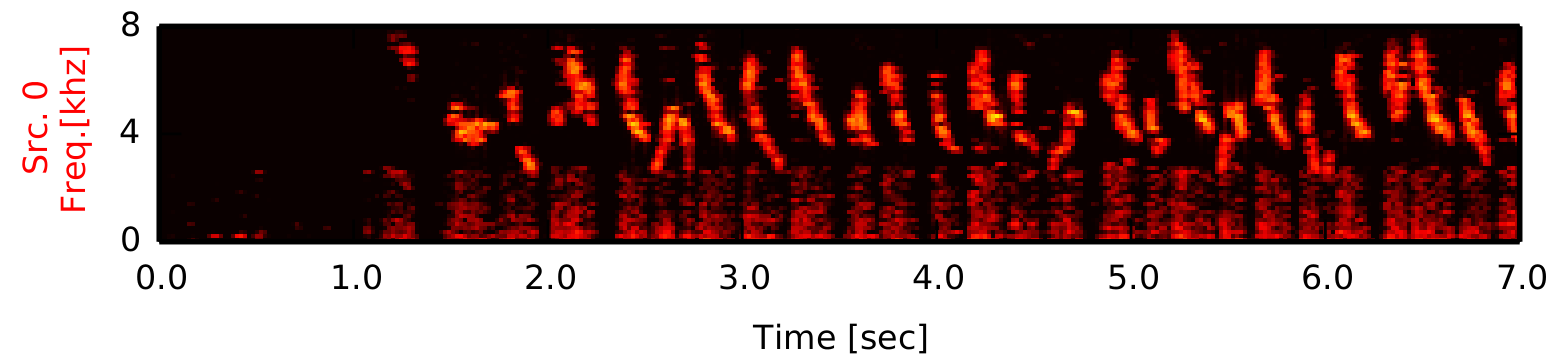

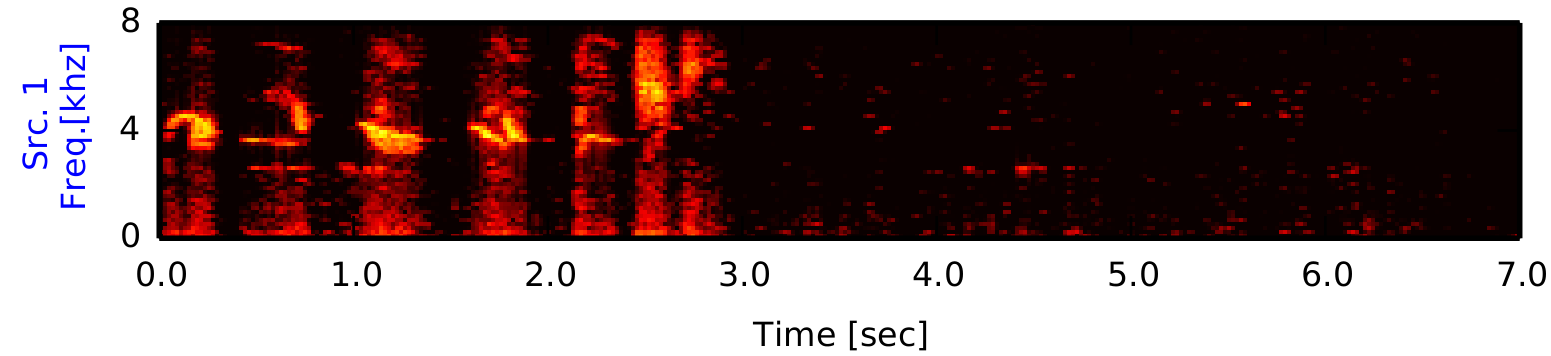

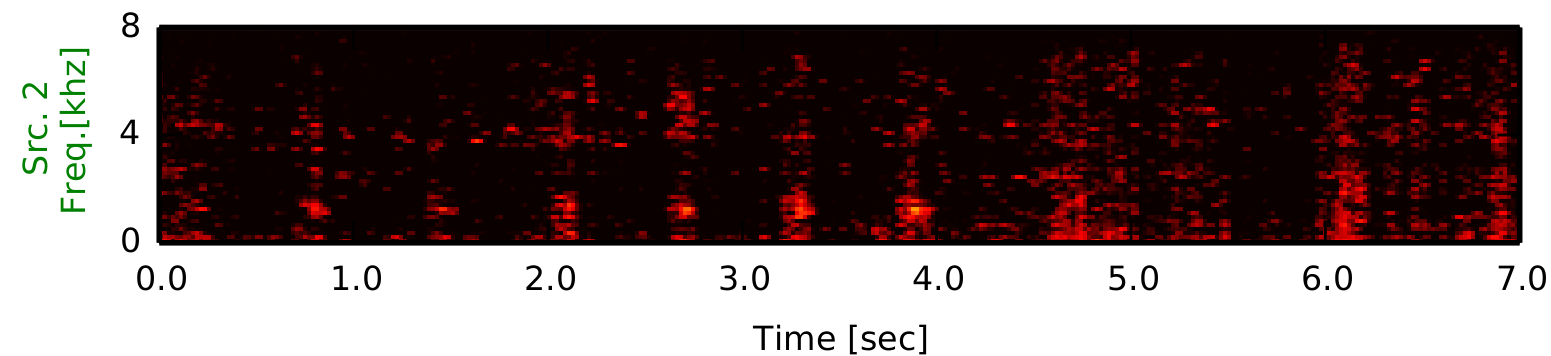

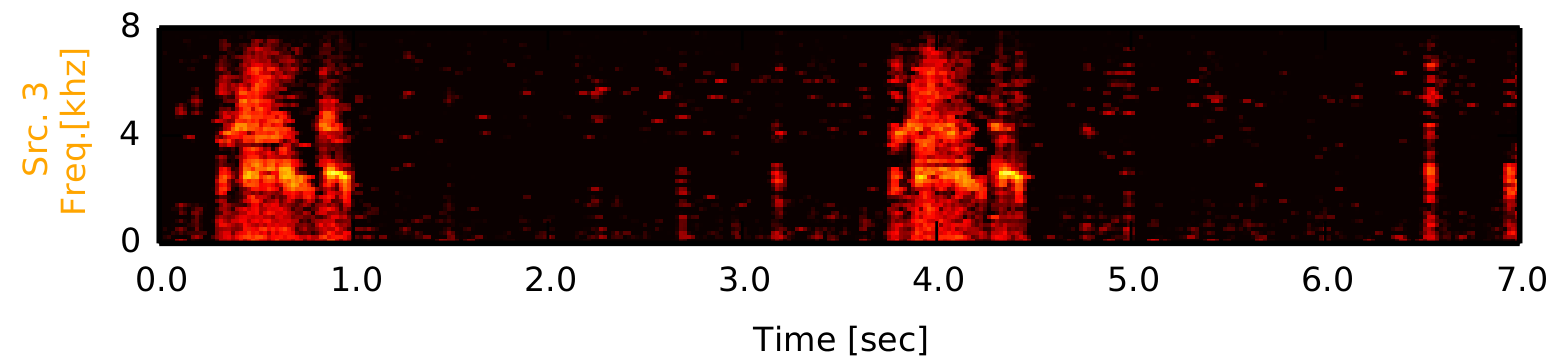

Separation and localization results of bird choruses with BNP-MAP

The left chart shows the directions and powers of the separated sounds. The panels on the right show their spectrograms.

- Src. 0: chorus of Zosterops japonicus

- Src. 1: chorus of Ficedula narcissina

- Src. 2: chorus of a raven

- Src. 3: chorus of Hypsipetes amaurotis

Separated sounds of bird choruses with BNP-MAP

Separated sounds starting at 00:25 of the full recording.

[1] T. Otsuka, K. Ishiguro, H. Sawada, and H. G. Okuno, "Bayesian nonparametrics for microphone array processing," IEEE/ACM Trans. on Audio, Speech and Language Processing, vol. 22, no. 2, pp. 493–504, 2014.

[2] K. Nakadai, T. Takahashi, H. G. Okuno, H. Nakajima, Y. Hasegawa, and H. Tsujino, "Design and implementation of robot audition system 'HARK'," Advanced Robotics, vol. 24, no. 5–6, pp. 739–761, 2010.